Workshop on Computing in Experimental Particle Physics (2024)

Asia/Shanghai

Pao Yue-Kong Library

500

Description

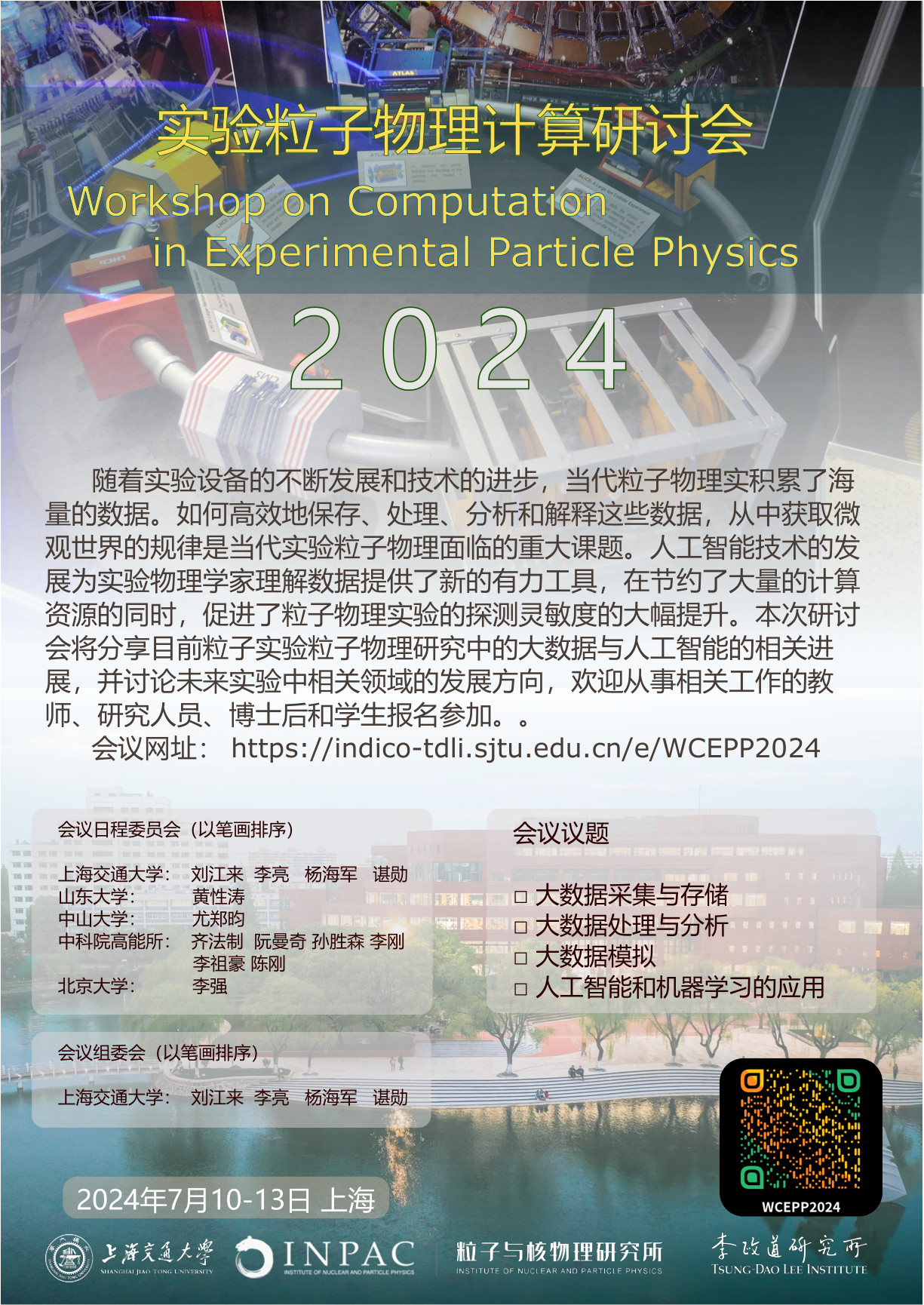

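Particle physics is a profound and fascinating discipline: by studying the fundamental constituents of the microscopic world, we can better understand the nature of the universe. As experimental facilities and techniques have advanced, however, experiments have accumulated massive amounts of data. How to store, process, analyze, and interpret these data efficiently, and to extract from them the laws governing the microscopic world, is a major challenge for contemporary experimental particle physics. Advances in artificial intelligence have given experimental physicists powerful new tools for understanding data, saving substantial computing resources while greatly improving the detection sensitivity of particle physics experiments.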

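To promote exchange among researchers in the field, to share results, ideas, and experience, and to jointly explore future directions for big data and artificial intelligence in experimental particle physics, we will hold the 2024 Workshop on Computing in Experimental Particle Physics at Shanghai Jiao Tong University from July 10 to 13, 2024. Check-in is on July 10, and the program begins on July 11. Faculty, researchers, postdocs, and students working in related areas are welcome to register.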

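Registration fee: 1500 yuan for faculty and postdocs, 800 yuan for students, and 500 yuan per person for accompanying family members.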

Venue: Lecture Hall, 5th floor, Pao Yue-Kong Library, Minhang Campus, Shanghai Jiao Tong University

Participants

Anfeng Li

Beijiang Liu

Bo Li

Cen Mo

Congqiao Li

Da Yu Tou

Daicui Zhou

Gang LI

Haijun Yang

Haiyun Teng

Hongyue Duyang

Huayang Wang

Huilin Qu

Jialin Li

Jianqin Xu

Jingyan Shi

Kun Wang

Liang Sun

Liang(亮) Li(李)

Manqi RUAN

Qiang Li

Qinghua HE

Qitian Wu

Quanbu Gou

Rui Zhang

Shijie Zhang

Siyuan Song

Tailin Wu

Tao Li

Tong Sun

Xiang Chen

Xiaopeng Zhang

Xinzhu Wang

Xu Wang

Xuliang Zhu

Yangu Li

Yao Zhang

Ye Yuan

Yi Tao

Yubo Zhou

Yunxuan Song

Yuxuan Xie

Zejia Lu

Zhengde ZHANG

Zhiyuan Pei

伟燕 张

傅 逸昇

勋 谌

庄 建

建文 韦

新华 林

朝义 渠

柄志 李

梓杰 黄

祖豪 Li

胜森 孙

陈 立风

鹏飞 肖